EFK Compose: Building an EFK Stack with docker-compose

EFK Compose

The "Docker Logging Efk Compose" guide has an example of an EFK stack built with docker-compose.

docker-compose.yml

`version: '2'`

fluentd/conf/fluent.conf

`# fluentd/conf/fluent.conf`

fluentd/Dockerfile

`# fluentd/Dockerfile`

Elasticsearch exits with code 78!?

Starting EFK Compose causes Elasticsearch to stop with a code 78 error. The log shows the message `at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured`.

Discovery settings are required

These parameters control how the Elasticsearch cluster is formed.

Elasticsearch 7.x and later appears to require at least one of them.

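| Parameter | Meaning |
|---|---|
| discovery.seed_hosts | Seed hosts listed directly in the settings |
| discovery.seed_providers | Seed hosts supplied by a provider, such as a file |
| cluster.initial_master_nodes | Master-eligible nodes used to bootstrap the cluster |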

`elasticsearch_1 | {"type": "server", "timestamp": "2021-08-15T01:00:46,290Z", "level": "INFO", "component": "o.e.n.Node", "cluster.name": "docker-cluster", "node.name": "93ed1cfad299", "message": "initialized" }`

Set only the master node

This is not a multi-node setup, so only the master node (cluster.initial_master_nodes) is set.

`elasticsearch:`
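A minimal sketch of what setting only `cluster.initial_master_nodes` might look like in docker-compose.yml. It assumes the official Elasticsearch image, which accepts settings as environment variables, and a service named `elasticsearch`. The image tag is an assumption and is not taken from the original file.

```yaml
# Sketch only: the image tag and service name are assumptions.
version: '2'
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.13.1  # assumed 7.x tag
    environment:
      # Name of the node that is allowed to bootstrap the cluster as master
      - cluster.initial_master_nodes=elasticsearch
    expose:
      - 9200
    ports:
      - "9200:9200"
```

The value has to match the node name exactly, which matters for the warning described next.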

Code 78 is gone, but now a "master not discovered yet" message appears...

`elasticsearch_1 | {"type": "server", "timestamp": "2021-08-15T01:56:08,340Z", "level": "WARN", "component": "o.e.c.c.ClusterFormationFailureHelper", "cluster.name": "docker-cluster", "node.name": "64a558eac1e4", "message": "master not discovered yet, this node has not previously joined a bootstrapped (v7+) cluster, and this node must discover master-eligible nodes [elasticsearch] to bootstrap a cluster: have discovered [{64a558eac1e4}{2UB6bFD4QUSAu9rXFwZFhw}{q72ZliAYQOq5r_Zq7-vFxw}{172.19.0.2}{172.19.0.2:9300}{cdfhilmrstw}{ml.machine_memory=8348790784, xpack.installed=true, transform.node=true, ml.max_open_jobs=20, ml.max_jvm_size=4177526784}]; discovery will continue using [127.0.0.1:9300, 127.0.0.1:9301, 127.0.0.1:9302, 127.0.0.1:9303, 127.0.0.1:9304, 127.0.0.1:9305] from hosts providers and [{64a558eac1e4}{2UB6bFD4QUSAu9rXFwZFhw}{q72ZliAYQOq5r_Zq7-vFxw}{172.19.0.2}{172.19.0.2:9300}{cdfhilmrstw}{ml.machine_memory=8348790784, xpack.installed=true, transform.node=true, ml.max_open_jobs=20, ml.max_jvm_size=4177526784}] from last-known cluster state; node term 0, last-accepted version 0 in term 0" }`

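Elasticsearch itself does respond.

By default the node name is a random-looking value (64a558eac1e4 in the log above), so it does not match the master-eligible node name [elasticsearch] that the node is trying to discover. Set the node name and the cluster name explicitly.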

`elasticsearch:`
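A sketch of how the node name and cluster name might be pinned so that the node name matches `cluster.initial_master_nodes`. The cluster name `efk-cluster` and the image tag are hypothetical; only the `elasticsearch` node name is suggested by the warning log.

```yaml
# Sketch only: cluster name and image tag are hypothetical values.
version: '2'
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.13.1  # assumed 7.x tag
    environment:
      # Fixed node name instead of the random-looking default
      - node.name=elasticsearch
      # Explicit cluster name (the default in the logs is docker-cluster)
      - cluster.name=efk-cluster
      # Must list the same name as node.name for a single-node bootstrap
      - cluster.initial_master_nodes=elasticsearch
    expose:
      - 9200
    ports:
      - "9200:9200"
```

With a fixed `node.name`, the entry in `cluster.initial_master_nodes` refers to a node that actually exists, so the node can elect itself as master.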

Accessing each port after the change

Elasticsearch

Accessing Elasticsearch (localhost:9200) shows the node name and cluster name that were configured.

Kibana

Accessing Kibana (localhost:5601) shows the Kibana UI.

Fluentd cannot connect to Elasticsearch... Faraday::Error::ConnectionFailed

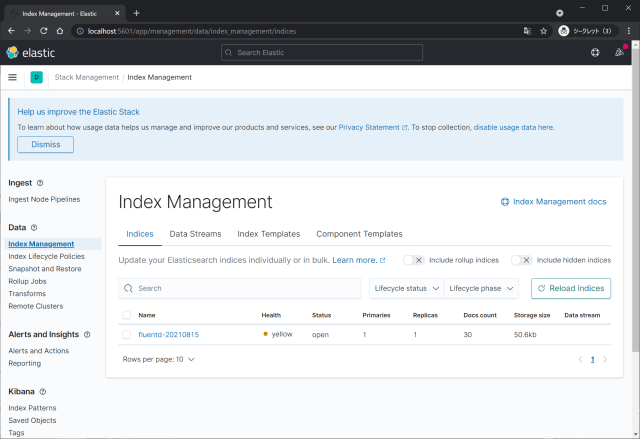

When logs are forwarded successfully, an index named in the form fluentd-YYYYMMDD is registered under Index Management in Kibana.

However, no index exists yet. Checking the logs shows the following error.

`fluentd_1 | 2021-08-15 03:12:14 +0000 [warn]: #0 failed to flush the buffer. retry_time=13 next_retry_seconds=2021-08-15 04:17:15 +0000 chunk="5c98f797a0fdcfe5f8155507b4d141b4" error_class=NameError error="uninitialized constant Faraday::Error::ConnectionFailed"`

Specify the container name in fluent.conf

After a lot of trial and error, it turned out that host in fluent.conf has to name the Elasticsearch container explicitly.

`# fluentd/conf/fluent.conf`
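The `host` value in fluent.conf has to be a name that resolves from inside the fluentd container. The following is a sketch of the compose side only, assuming services named `fluentd` and `elasticsearch` and a hypothetical explicit `container_name`. With this layout, `host elasticsearch` in fluent.conf would point at the Elasticsearch container.

```yaml
# Sketch only: service names, container_name and image tag are assumptions.
version: '2'
services:
  fluentd:
    build: ./fluentd                    # uses fluentd/Dockerfile
    volumes:
      - ./fluentd/conf:/fluentd/etc     # mounts fluentd/conf/fluent.conf
    links:
      - "elasticsearch"                 # makes the name resolvable from fluentd
    ports:
      - "24224:24224"
      - "24224:24224/udp"
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.13.1  # assumed 7.x tag
    container_name: elasticsearch       # hypothetical explicit container name
    expose:
      - 9200
```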

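The index is now created in Kibana

Unlike before, opening Kibana now goes straight to the post-login screen.

Select Stack Management from the menu at the top left.

Select Index Management. An index named in the form fluentd-YYYYMMDD is registered.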